Image super-resolution reconstruction uses a specific algorithm to restore a low-resolution, blurred image of a scene to a high-resolution image. In recent years, with the vigorous development of deep learning, this technology has been widely used in many fields, and more and more deep-learning-based methods for image super-resolution reconstruction have been studied. Following the GAN principle, the generator produces a pseudo high-resolution image, and the discriminator then calculates the difference between this image and the real high-resolution image to measure its authenticity. Based on SRGAN (super-resolution generative adversarial network), this paper makes three main improvements.

First, it introduces the attention channel mechanism, that is,it adds Ca (channel attention) module to SRGAN

network,and increases the network depth to better express high frequency features; Second, delete the

original BN (batch normalization) layer to improve the network performance; Third, modify the loss function

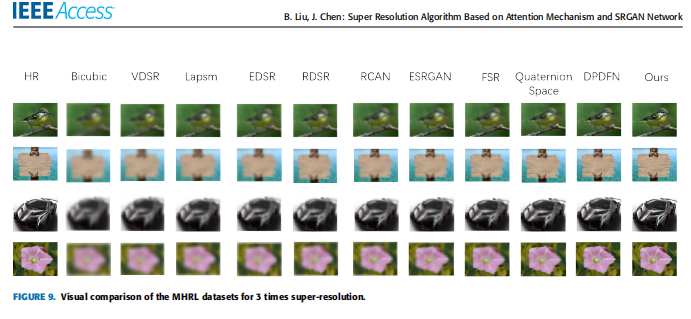

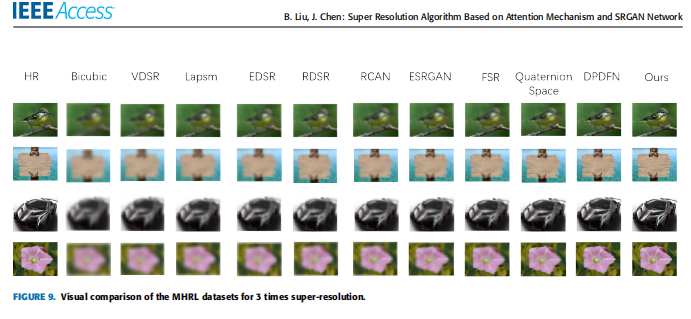

to reduce the impact of noise on the image. The experimental results show that the proposed method is

superior to the current methods in both quantitative and qualitative indicators,and promotes the recovery of

high-frequency detail information.The experimental results show that the proposed method improves the

artifact problem and improves the PSNR (peak signal-to-noise ratio) on set5,set10 and bsd100 test sets.
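
To make the channel attention idea concrete, the following is a minimal, dependency-free sketch of a squeeze-and-excitation style module. It is an illustration under stated assumptions, not the network used in this paper: the function name `channel_attention`, the weight matrices `w_down` and `w_up`, the reduction ratio and the toy input are all hypothetical, and in a real network the weights would be learned and the module would sit inside the residual blocks of the generator.

```python
import math

def channel_attention(feature_map, w_down, w_up):
    """Rescale each channel of feature_map by a gate in (0, 1).

    feature_map: list of C channels, each an H x W list of floats.
    w_down: (C // r) x C weight matrix (hypothetical; normally learned).
    w_up:   C x (C // r) weight matrix (hypothetical; normally learned).
    """
    # Squeeze: global average pooling turns each channel into one scalar.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation, step 1: reduce the channel dimension and apply ReLU.
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed)))
        for row in w_down
    ]
    # Excitation, step 2: restore the channel dimension and apply a sigmoid.
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w_up
    ]
    # Scale: multiply every pixel of a channel by that channel's gate.
    scaled = [
        [[gate * value for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]
    return scaled, gates

# Toy input (assumed values): 2 channels of 2 x 2 pixels, reduction ratio 2.
features = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[4.0, 0.0], [0.0, 4.0]],
]
w_down = [[-0.5, 1.0]]   # shape 1 x 2
w_up = [[1.0], [-1.0]]   # shape 2 x 1
scaled, gates = channel_attention(features, w_down, w_up)
```

The output keeps the shape of the input; only the relative weight of each channel changes, which is how such a module can emphasize channels that carry high-frequency detail.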

In this paper, because the SRGAN super-resolution network often produces artifacts in high-frequency details, a channel attention network is introduced. At the same time, because the deep convolutional neural network is too deep, the training time is too long and the super-resolution efficiency is low. In the discrimination model, the original discriminator of SRGAN is used to guide the training of the super-resolution model. In the loss function, the L1 loss is used to further improve the visual effect of image super-resolution. On the Set5, Set14 and BSD100 datasets, the PSNR is improved by 0.72 dB, 0.60 dB and 1.42 dB, respectively, compared with the SRGAN reconstruction results. However, due to the use of the L1 loss, the high-frequency features of the reconstructed image are ignored, which leads to differences between the reconstructed image and the real image in some details. In addition, due to the depth of the network, the computational cost of the algorithm is too large and the training efficiency is not high enough. Next, we will study how to further improve the effect of image reconstruction from these two aspects.
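
As a reference for the quantities discussed above, the sketch below computes the L1 loss, the mean squared error and the PSNR for two toy reconstructions. It assumes the standard definition PSNR = 10 log10(MAX^2 / MSE) with 8-bit pixel values; the function names and sample values are illustrative only, and the exact loss formulation used in the paper may include further terms.

```python
import math

def l1_loss(pred, target):
    """Mean absolute error between two equally sized flat pixel lists."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def mse_loss(pred, target):
    """Mean squared error between two equally sized flat pixel lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def psnr(pred, target, max_value=255.0):
    """Peak signal-to-noise ratio in dB (max_value=255 assumes 8-bit images)."""
    mse = mse_loss(pred, target)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

# Toy data (assumed values): one reference and two reconstructions.
target = [100.0, 120.0, 140.0, 160.0]
small_errors = [102.0, 118.0, 142.0, 158.0]  # every pixel is off by 2
one_outlier = [100.0, 120.0, 140.0, 168.0]   # a single pixel is off by 8

l1_small, l1_outlier = l1_loss(small_errors, target), l1_loss(one_outlier, target)
mse_small, mse_outlier = mse_loss(small_errors, target), mse_loss(one_outlier, target)
psnr_small, psnr_outlier = psnr(small_errors, target), psnr(one_outlier, target)
```

In this toy case both reconstructions have the same L1 loss, while the one with a single large deviation has a four times larger MSE and therefore a lower PSNR. This is one way to see why an L1 loss is less dominated by isolated noisy pixels than a squared-error loss. Under the same definition, for a single image a PSNR gain of 0.72 dB corresponds to roughly 15% lower MSE.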